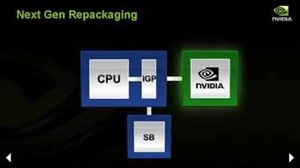

CPUs and GPUs shouldn't share die space

Both AMD and Intel have talked about integrating their IGPs onto the CPU die in the future, and it wasn't long before Huang jumped on this, painting a picture of mixing something that's fantastic with something that's flawed and unsuitable for what's required. He used the analogy of mixing a decanted bottle of a great wine like a '63 Chateau Latour with a '07 bottle of Robert Mondavi. In other words, the two should be kept apart, because one will bring down the quality of the other. Huang finished his deconstruction of this strategy by saying "it's hard to stay modern on both fronts", and it's hard to disagree with that statement if you're a gamer.

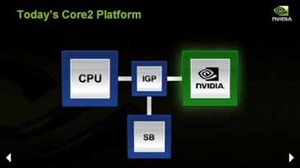

Nvidia's CEO was quick to point out that he loves Intel CPUs, and that he thinks they're some of the best (if not the best) in the business. He pushed the idea of the CPU and GPU working together in a heterogeneous computing model quite heavily. The picture he painted was one where both are critical in a modern computer, and he pressed home to analysts the point that hardware should be used for the task it's designed for.

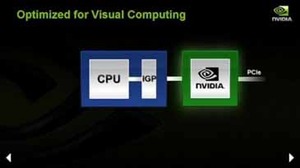

Nvidia has been talking about “optimised” PCs for a while now, where you have a CPU that’s fast enough and a graphics card that’s as fast as possible. Of course, this is a convenient recommendation for Nvidia to make, given that it's a graphics (or visual computing) company, but if you’re a gamer it makes a lot of sense to spend more on your graphics card than on your CPU, because that's what will improve your gaming experience. To an extent, Intel believes the opposite is true because it wants to sell you the fastest processor possible, and there are benefits to having a faster CPU, but for a gamer those benefits just aren’t as profound as Intel would hope. However, the chip giant has never denied to bit-tech that gamers should buy a discrete graphics card if they want to play the latest games.

The bizarre thing is the contradictory comments coming from Fosner, who says that discrete graphics will no longer be required in the future. Like Huang, I believe that is never going to happen unless PC gaming were to mysteriously disappear into a time warp, and I can’t see that happening any time soon, even when you take into account some of the problems that PC gaming faces today.

What’s more, Intel wouldn’t have said that Larrabee is a discrete graphics product if it didn’t believe there was a market for it. It seems to me that Intel is pushing out pre-Larrabee propaganda to rubbish current discrete GPUs by saying they are no longer required... then, when Larrabee is released, Intel will suddenly change its tune and say discrete graphics products are the dog's dangly bits. We’ve seen it before from ATI on Shader Model 3.0, and then again on DirectX 10 when it realised it was going to be late to market. We’ve also seen it from Nvidia when it came to unified shader architectures. No surprises here.

And while we’re talking about Larrabee, Nvidia's honcho had his own two penneth to add to the debate surrounding what to us is an interesting technological development—regardless of whether it succeeds or fails. “We’re going to open a can of whoop ass on that,” said Huang.

"There are some people who are waiting for us to talk about Larrabee," he later added. "A collection of microprocessors, some texture filtering units and some new instructions somehow will wipe out the... I don't even know how many tens of thousands of man years of research around visual computing. The fact of the matter is we are in the process of reinventing ourselves all the time. We start this every three or four years and we're right now in the curve of what we call computational graphics. With each one of these innovation curves, we carry all of the benefits from the past because there are a lot of applications out there."
